With electronics everywhere, why have robots not found their way into the home?

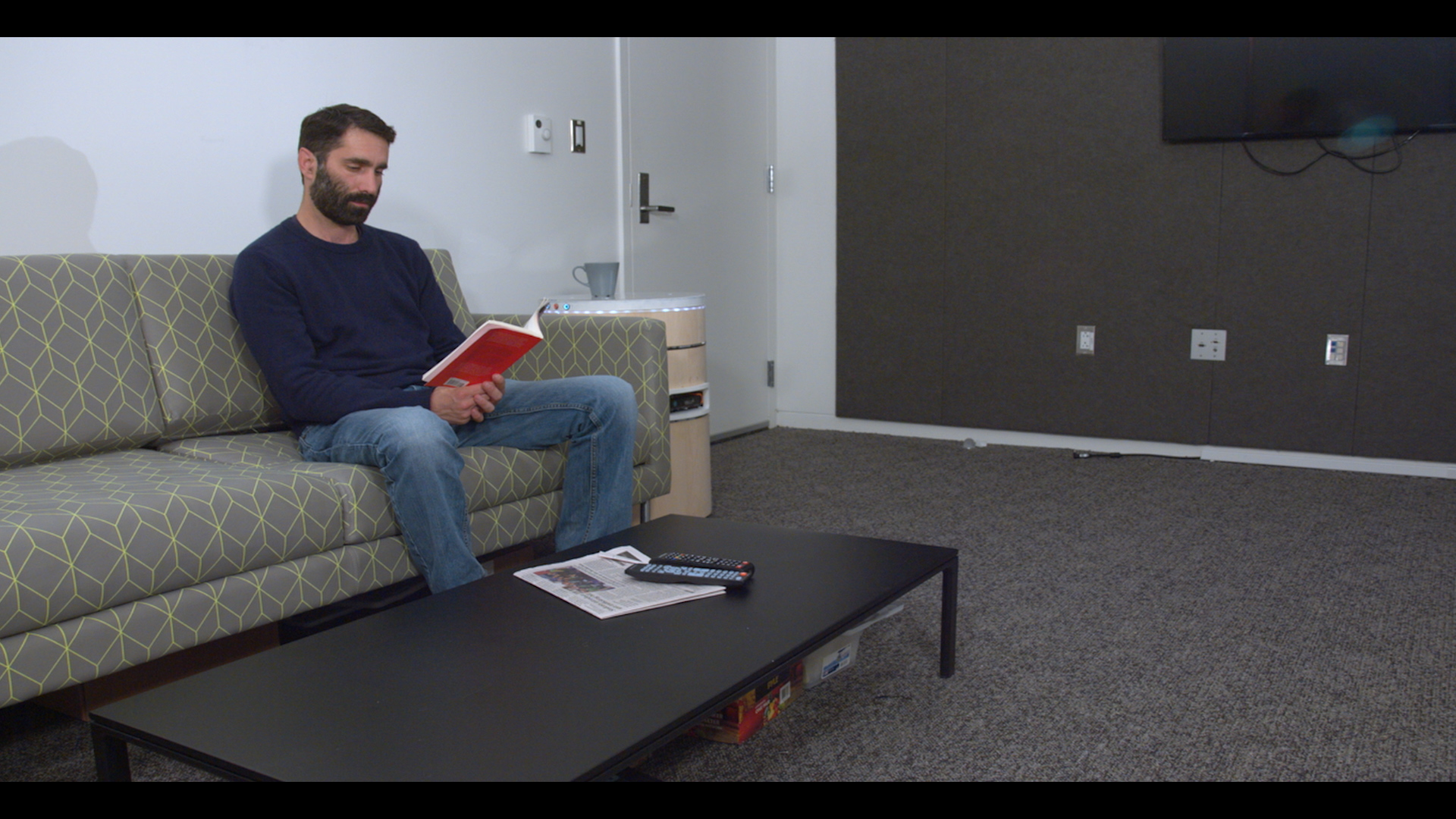

We think the answer is that we picture robots as metallic, clunky, dangerous, and out of place in a home. TableBot, or Tbo, is the first in a category of robots we call situated robots.

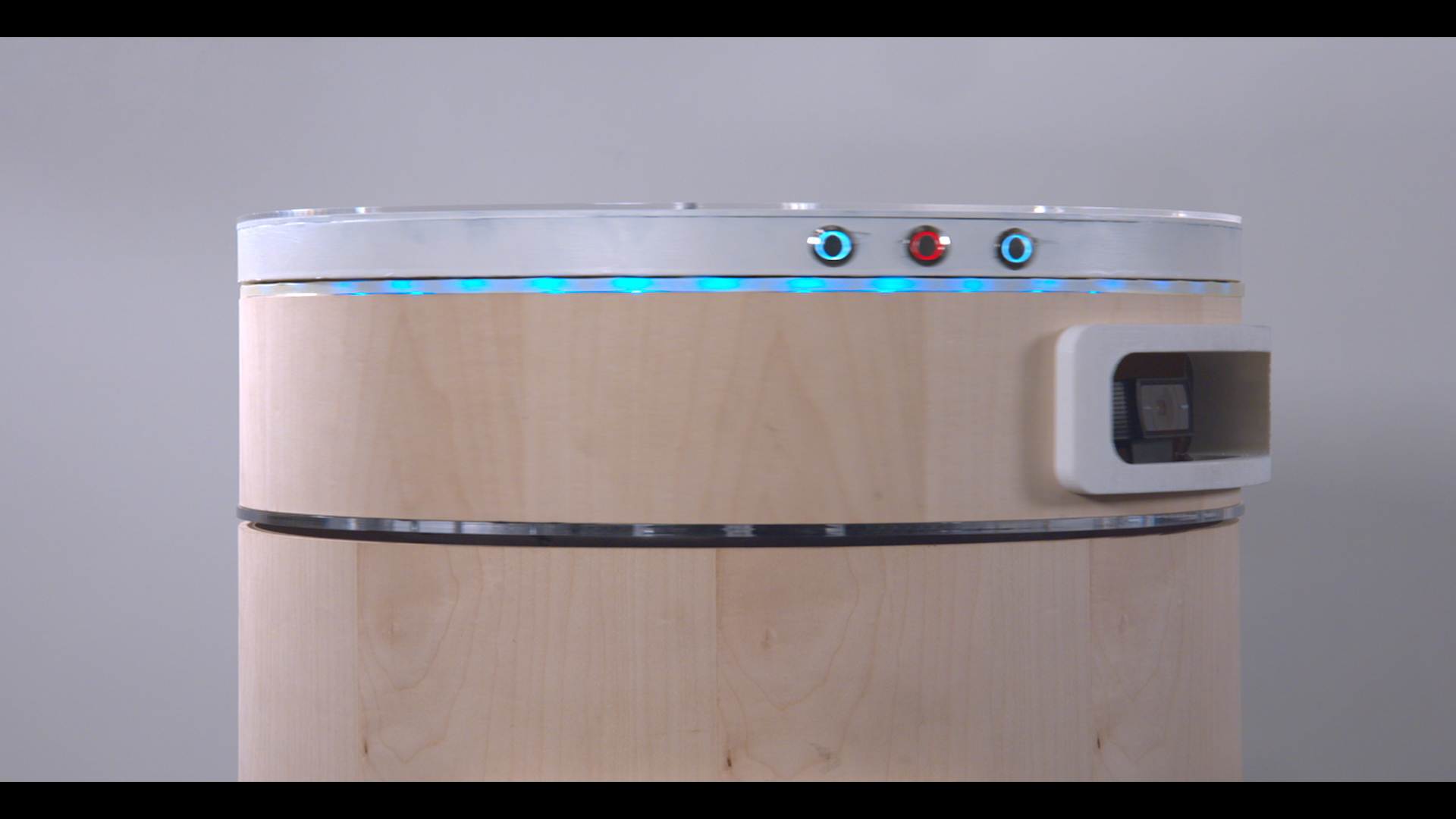

Tbo is a beautiful side table for your sofa, made from plywood cylinders with a maple veneer. But it is also a highly functional robot: it moves around, generates a 3D point cloud map of a room, delivers great sound, and projects a friend onto a wall through Google Hangouts while its Full HD camera captures crystal-clear video of you. Under the hood, it is powered by an Intel NUC running ROS, with a Raspberry Pi set up as a ROS node for GPIO.

Tbo was developed to research multiple topics in Human-Robot Interaction, experimenting with ideas on telepresence, robotic furniture, and human responses to the mobilization of normally stationary objects. Its development also opens up research topics in computer vision, SLAM (specifically map sharing), omnidirectional audio reception, and many others.

The TableBot project was conducted under the auspices of Brown University's Humanity Centered Robotics Initiative.

The research paper on Tbo has been accepted!

It will be presented at the 2020 ACM/IEEE International Conference on Human-Robot Interaction in Cambridge, United Kingdom, in March 2020.

Features

Situated Robotics

AI Virtual Assistant

Telepresence

Localization, Mapping and Movement

Innovative robot control interface using a rotating tabletop

Movable, independent Full HD camera and projector for video conferencing using Google Hangouts

Sturdy weight-distributing design that lets it function fully as a table

Integration of the Watson API for voice commands

Omnidirectional microphone

Ambient LED Status Light and great sounding audio (nickname: PartyBot)

Easy-to-remove outer shell design

Ample room for further development

Tbo Components in Assembly Order

Situated Robotics

Tbo is the first of a class of robots we are developing that we call “Situated Robots”. Our goal is to develop robots that “hide in plain sight” as furniture and other similar objects, situated within the built environment. We’re interested in questions that an architect, for example, might ask: questions of spatiality and human scale. But we are also interested in making the interface disappear as much as possible.

We think that robots will become increasingly woven into the fabric of the built environment, integrated directly into the architecture and furniture. We want to create robots that people don’t think of as robots, but as everyday objects first and foremost. These objects can become portals where users might access the internet or interact with others through telepresence, for example.

This led to a few design principles in making Tbo:

It must have a natural control interface, so that moving Tbo feels as intuitive as moving an ordinary table

It must function perfectly as a table

And of course, it must look like a table while presenting its extra functionality in an obvious manner.

By using an all-wood cylindrical outer shell, the robot could keep its look of a table, while the additional 3D printed lips and the acrylic that outlines the edges of different strata hint at the different functionality that lies behind the shells.

AI Virtual Assistant

Tbo is also a new kind of integrated virtual assistant platform, which we hope to develop further into a whole family of smart objects.

Unlike Google Home or Alexa, which are spatially fixed, Tbo is mobile and situated within its environment in ways that expand its functionality considerably.

We envision Tbo becoming the base station for multiple other products in the situated robotics line. For example, Tbo can scan a space and share the 3D map of the room with other connected situated robots. It can also take on demanding computation, letting other in-home devices be more power efficient and cheaper to build.

Telepresence

In addition to moving about the space, these objects let a user “beam into” them, which gives them the ability to create connections between spaces and people. Because Tbo is mobile and equipped to project onto almost any surface, the experience is unlike other telepresence applications. By using projectors rather than screens, telepresence becomes less “Skype on a stick” and more of an embodied, immersive experience.

Localization, Mapping and Movement

SLAM is a crucial function for Tbo's autonomy. By generating a 3D point cloud map of the room, Tbo is able to navigate autonomously from point A to point B, as well as return to its charging dock. A highly accurate autonomous drive system is crucial for expanding Tbo's capabilities and, by extension, those of other situated robots.
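As a rough illustration of how a 3D point cloud map can support navigation from point A to point B, the sketch below flattens a list of points into a 2D occupancy grid and then searches it for a route. It is not Tbo's actual navigation code: the function names `occupancy_grid` and `shortest_path`, the height thresholds, the grid layout, and the choice of breadth-first search are all assumptions made for the example.

```python
from collections import deque


def occupancy_grid(points, cell_size, width, height, min_z=0.05, max_z=0.65):
    """Project 3D points (x, y, z in metres) onto a 2D grid of blocked cells.

    Points below min_z (the floor) or above max_z (assumed here to be
    roughly the robot's height) are ignored, since they would not block it.
    """
    grid = [[False] * width for _ in range(height)]
    for x, y, z in points:
        if not (min_z <= z <= max_z):
            continue
        col = int(x // cell_size)
        row = int(y // cell_size)
        if 0 <= row < height and 0 <= col < width:
            grid[row][col] = True  # mark the cell as an obstacle
    return grid


def shortest_path(grid, start, goal):
    """Breadth-first search over free, 4-connected cells.

    Returns the list of (row, col) cells from start to goal,
    or None if the goal cannot be reached.
    """
    height, width = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        row, col = cell
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if 0 <= r < height and 0 <= c < width and not grid[r][c] and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None


# Hypothetical scan: two obstacle points and one floor point that is ignored.
scan = [(0.75, 0.25, 0.3), (0.75, 0.75, 0.3), (0.75, 1.25, 0.01)]
room = occupancy_grid(scan, cell_size=0.5, width=3, height=3)
route = shortest_path(room, start=(0, 0), goal=(0, 2))
```

A real system would more likely rely on an existing ROS navigation package and a cost-aware planner; breadth-first search is used here only because it is short and easy to follow.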

Specifications

Cylinder with an 18" diameter and 25" height

Around 10-15 kg in weight

Intel NUC running Ubuntu as the master ROS node

Raspberry Pi w/ Motor HAT as a ROS node

Asus Xtion Pro for SLAM

Logitech C920 Webcam

AAXA P300 Pico Projector

MAXOAK 50000mAh Power Bank

12V motor powering the camera strata

4" Wall Mount Speaker with a 100W Amp

Future Research Topics / Improvements

Hardware:

An omnidirectional drive system

3-axis webcam mount

Addition of a 360 degree LIDAR

Higher density LED strip

Integration of photo interrupters for fully automatic startup sequence

Better power management

Software:

Increased integration of Watson API into ROS for more automation

Optimal projection wall detection

Higher SLAM accuracy and resolution using LIDAR

Computer vision for detecting the person currently speaking

Team

Jung Yeop (Steve) Kim – Project Lead / Hardware Design

McKenna Cisler – Movement and Interaction

Jonathan Lister – SLAM and Video Conferencing

Benjamin Navetta – Watson / Voice Command

Horatio Han, Marianne Aubin Le Quere, Beth Phillips, Maartje de Graaf, Ethan Mok, Judy Kim, Peter Haas

Ian Gonsher – Principal Investigator

Contact

Please email {kimjungyeop, ian_gonsher, peter_haas} @ brown.edu.